You notice your team needs a software solution to scale and do your tasks more efficiently. What’s the first thing you do?

I bet you consult customer reviews from the top software review sites — like 84% of B2B buyers.

And yet, 61% of small business owners in the U.S. have buyer’s remorse over a technology purchase. Even worse: 60% of technology buyers involved in renewal decisions regret every purchase they make.

Yikes.

Aren’t reviews supposed to help us avoid buying the wrong tool? What gives?

The problem is most software buyers know they should read user reviews, but they don’t know how to evaluate them well.

In this article, you’ll learn:

- How to spot untrustworthy reviews (five signs you should look out for)

- How to find authentic customer reviews (seven handy ways to comb through testimonials)

- How to read reviews (like a seasoned pro)

5 signs you should not trust a review

Half of review mining as a buyer is spotting and ignoring unhelpful reviews. Here are five red flags you should watch out for. 🚩

1: The reviewer’s profile or use case doesn’t match yours

Many tools meet the needs of individuals, small business owners, and enterprise customers alike, especially if the software is an OG darling of its category.

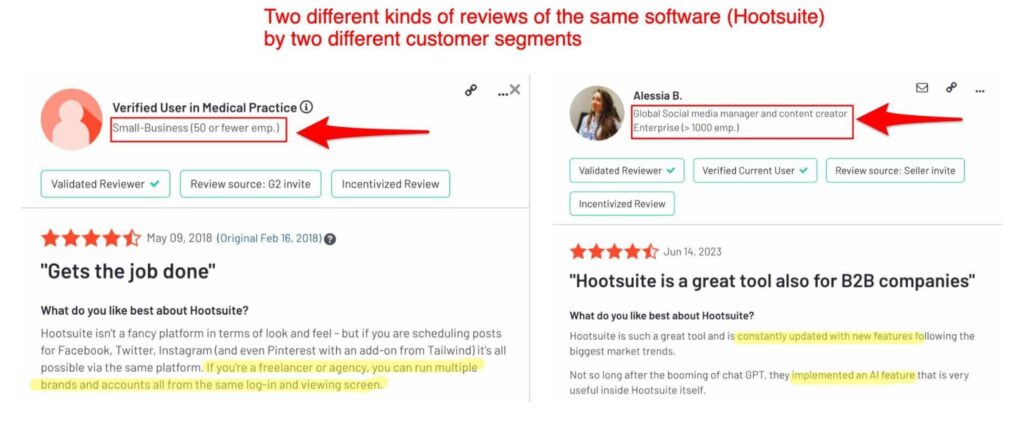

However, the use case for each type of customer is different. Take the social media management tool, Hootsuite. It has plans to fit the needs of an independent freelance social media strategist and an enterprise organization with a dedicated social team. But the former needs vastly different features than the latter.

- A freelance social media strategist juggling various clients likes Hootsuite because it can manage multiple accounts on a single social media platform.

- An enterprise company is a Hootsuite fan because of its in-depth analytics and ability to connect to a wide variety of social media platforms.

It’d be a waste of time for an enterprise customer to read an independent contractor’s review of the same tool and vice versa. No wonder “reviews relatable to me” is the most important evaluation factor on review sites for software buyers, according to TrustRadius’ latest Buyer Disconnect Survey.

But how do you find reviews that are relatable to you? By making sure the reviewers whose opinions you consider have a profile and needs that match yours. While scrolling, check whether:

- The profile of the reviewer matches yours — like job title and industry

- The use case is consistent with your needs

- The company size is similar to yours

Priyamvad Rai, a film editor who often purchases editing software, agrees. In his domain, different tools do different things well. So, his process involves asking whether the software in question does what he needs well.

“For example, Adobe Premiere Pro is great for motion graphics, whereas AVID Media Composer is best for long format films, web series, etc. In this sense, I see HOW the person used the software and whether it aligned with the way I intended to use it.”

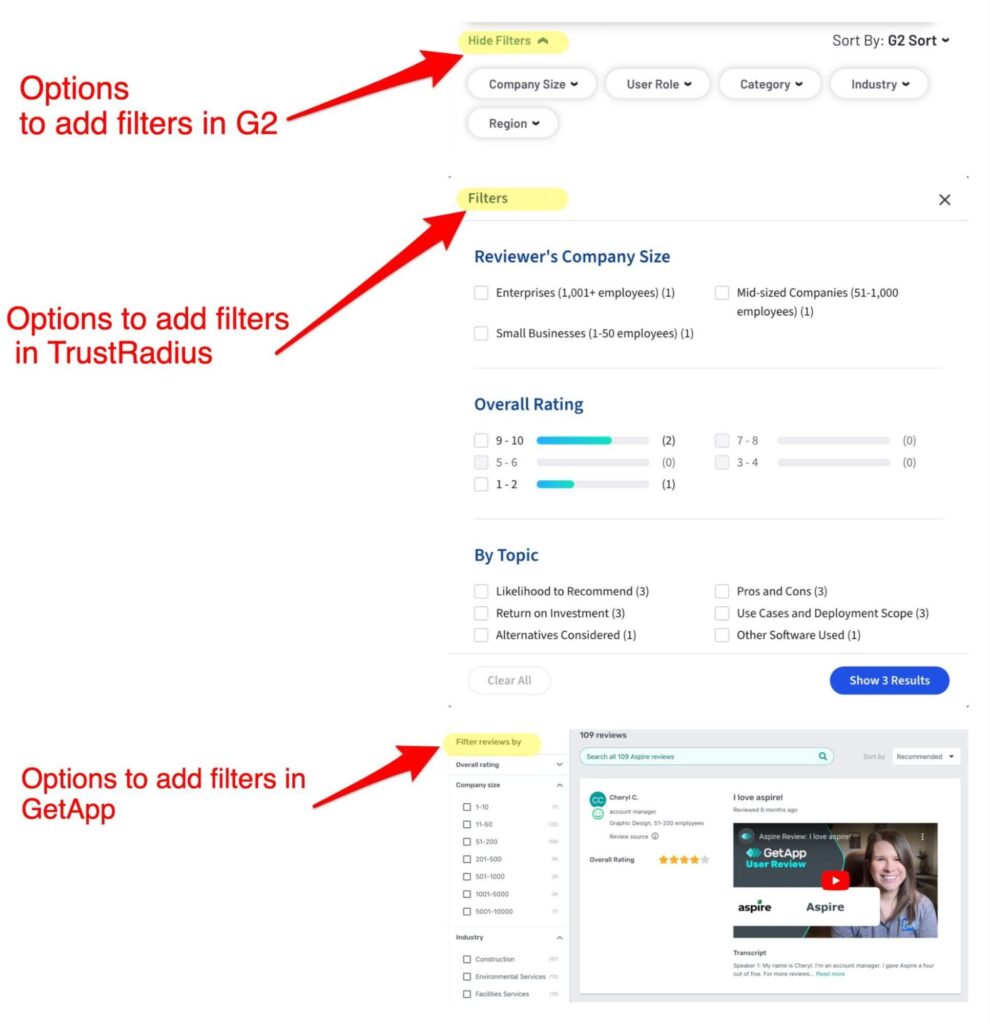

Luckily, most review sites let you filter reviews, at least to some extent, by your needs and company size. Find the “more filters” option on a review site to enter your details, and you’ll only see testimonials from reviewers who match your criteria.

2: The review is extremely positive or negative

An extreme review — whether it’s positive or negative — may stem from an emotionally heightened response or an isolated incident.

Someone might leave a negative review because:

- They’re a competitor trying to sway the market’s perspective

- They want to get the attention of an unresponsive customer service team

- They didn’t do their due diligence and found the product didn’t have a feature they needed

On the flip side, someone might leave a glowing review because:

- They were paid to do so by the company

- The marketers of a vendor only asked the happy customers to leave a review

- They were offered a product discount in exchange for a biased positive review

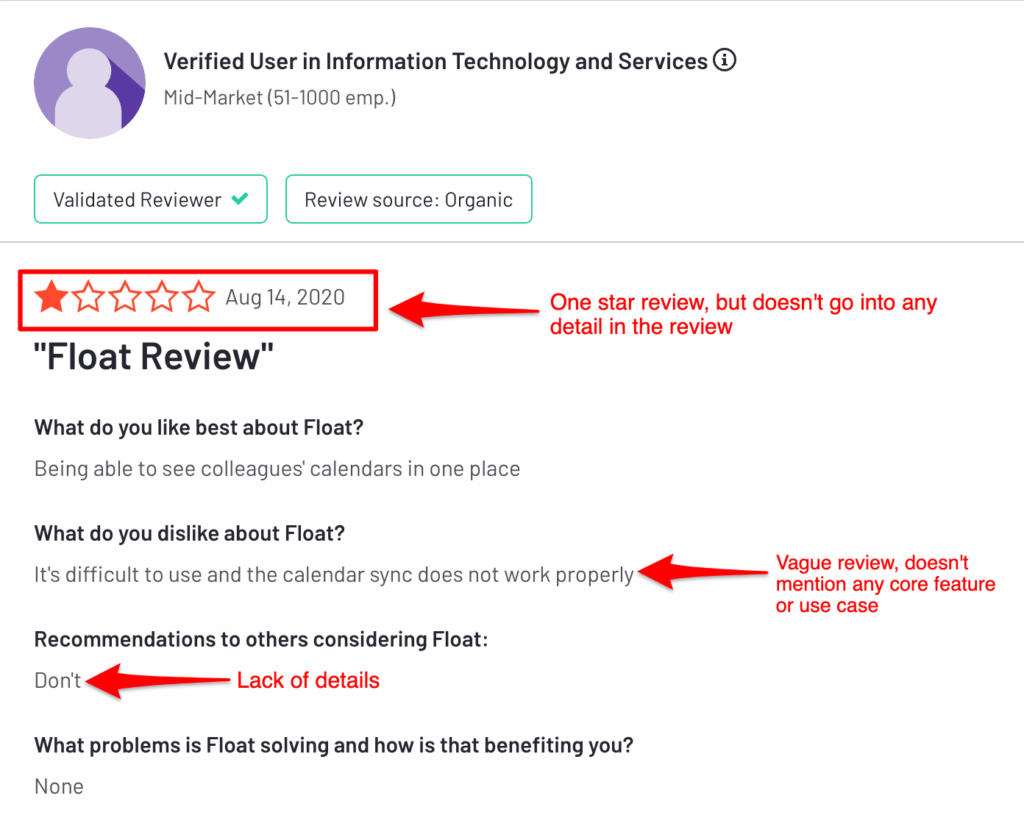

In both cases, you shouldn’t give much weight to one-star and five-star reviews on their own. Take, for example, a review of the resource management software Float:

It’s a one-star review, but it doesn’t explain why the reviewer didn’t like Float or why they wouldn’t recommend it. What’s more, it’s anonymous. Ding, ding, ding: it’s an untrustworthy review.

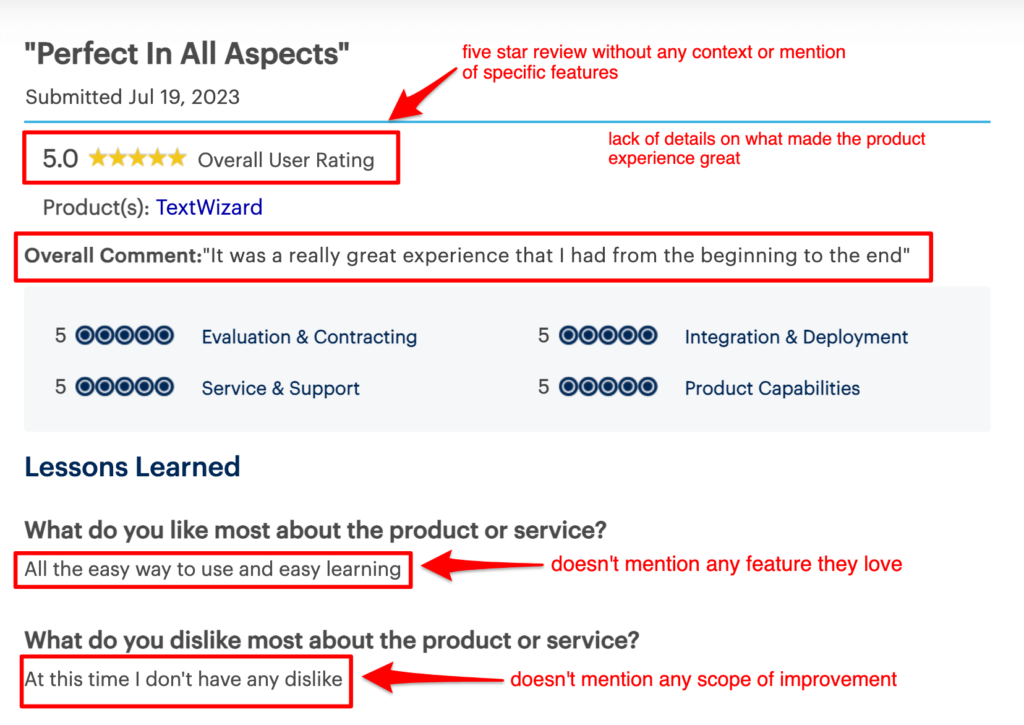

At the other end of the spectrum, five-star reviews without any context or details are also unhelpful. Take this review of the AI writing software TextWizard, which doesn’t mention any specific features the reviewer loved or anything else that made them buy the tool and stick with it.

Tarun Agrawal, VP of Business at Mailmodo, also considers any review (whether one-star or five-star) untrustworthy if it doesn’t mention any room for improvement. He recommends looking at the depth of each review to determine whether it’s fake or real.

“If someone says they find no issues at all in the tool it is most likely to be a paid review as it is difficult to solve for all the use cases from a single tool.”

So, the bottom line: you can consider polarized reviews if they’re specific and explain in depth why the reviewer hated or loved a tool. TrustRadius even builds this into its scoring algorithm, giving more weight to in-depth reviews.

⚠️ Remember: If many reviews are one-star or five-star, it’s unlikely to be an isolated incident. Dig deep to understand the context behind each rating. If all the one-star reviews complain about the same thing, for instance, it’s likely a real issue.

3: The review is older than two years

Remember 2020, when few tools had AI features of their own? Before every tool had either a ChatGPT integration or a “world-class” native AI feature? Good times.

What’s two years in the real world is five years (or more!) in technology years. Things move and evolve fast.

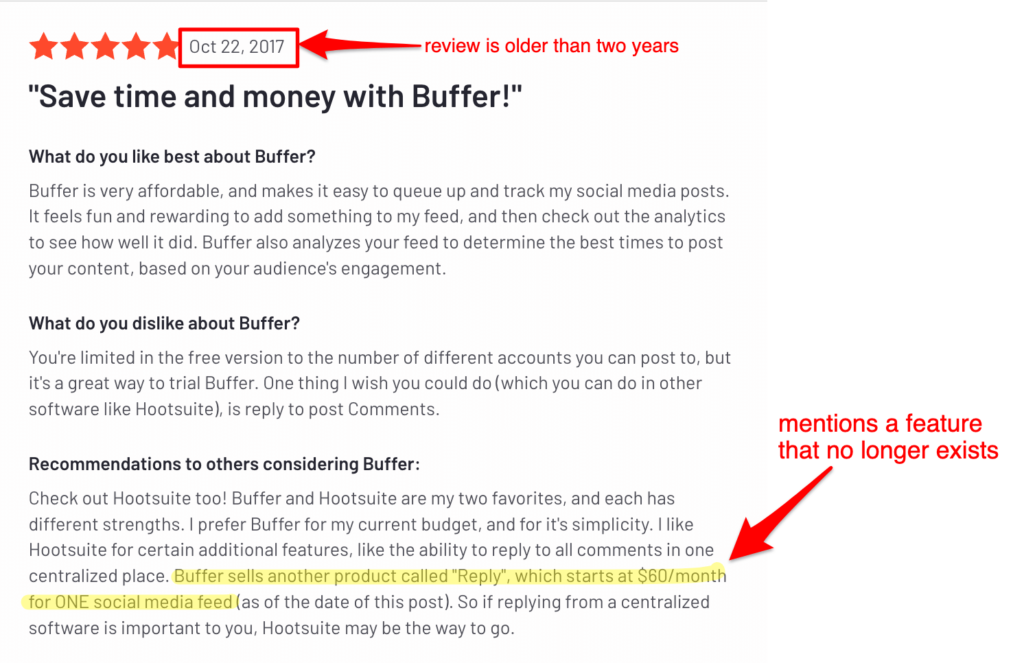

Sort reviews so the most recent appear first. Otherwise, you might end up evaluating testimonials that mention features that no longer exist. If you read the following review while evaluating the social media scheduling tool Buffer, you’d be reading about a Reply feature that no longer exists.

The above review is kind enough to mention, “as of the date of this post,” but most old reviews won’t give you that luxury.

A good rule of thumb: If it’s older than two years, it’s no longer relevant to your purchasing decision.

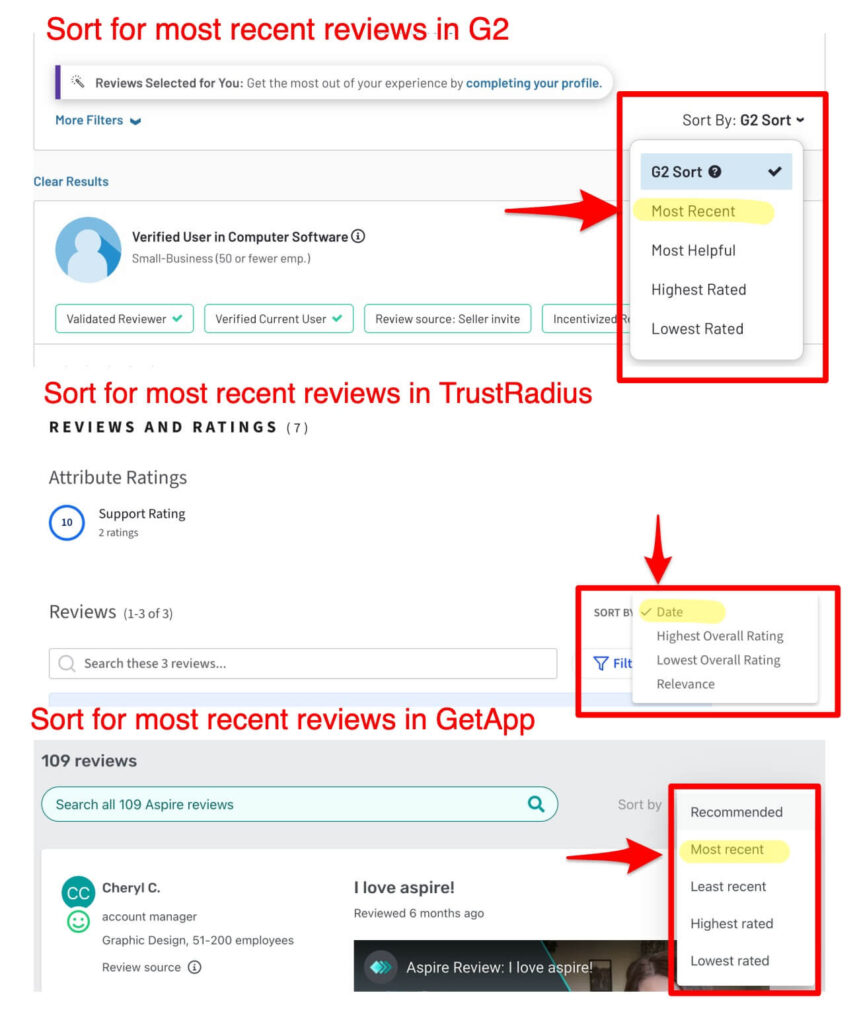

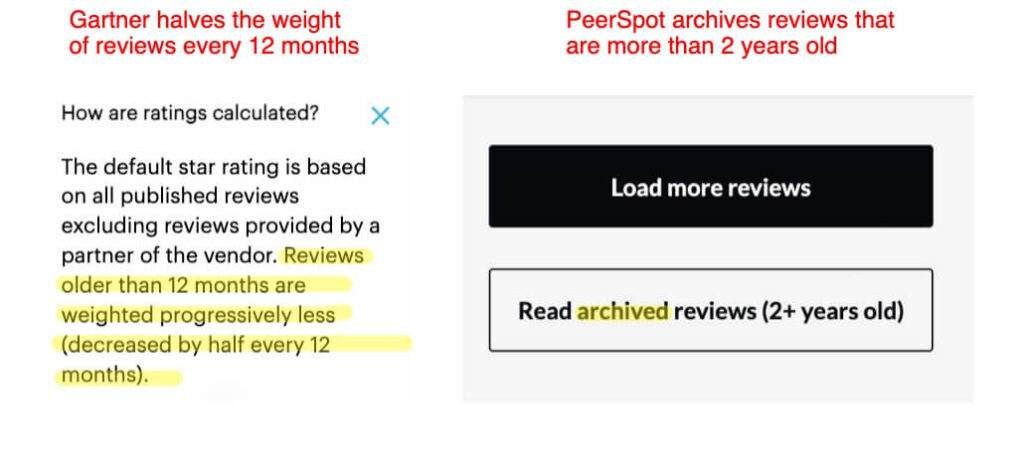

Thankfully, it’s easy to filter for the most recent reviews on almost all review sites. Look for the “sort by” option at the top.

Many review sites make filtering for recency even easier by tweaking their rating calculations or hiding older reviews. For example, PeerSpot automatically archives reviews older than two years, and Gartner Peer Insights cuts the weight of each review in half every 12 months.
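Halving a review’s weight every 12 months is exponential decay with a 12-month half-life. Here is a minimal sketch of the idea, assuming weight = 0.5 ** (age in months / 12) and a simple weighted average of star ratings; it illustrates the principle and is not Gartner’s published formula. The function names and sample reviews are hypothetical.

```python
from datetime import date


def review_weight(posted: date, today: date, half_life_months: float = 12.0) -> float:
    """Weight of a review that halves every `half_life_months` (exponential decay)."""
    age_months = (today.year - posted.year) * 12 + (today.month - posted.month)
    age_months = max(age_months, 0)  # treat future-dated reviews as brand new
    return 0.5 ** (age_months / half_life_months)


def decayed_average(reviews: list[tuple[date, float]], today: date) -> float:
    """Star average in which each review counts according to its age."""
    if not reviews:
        raise ValueError("need at least one review")
    weights = [review_weight(posted, today) for posted, _ in reviews]
    weighted_sum = sum(w * stars for w, (_, stars) in zip(weights, reviews))
    return weighted_sum / sum(weights)


today = date(2024, 6, 1)
reviews = [(date(2024, 6, 1), 2.0), (date(2022, 6, 1), 5.0)]
print(decayed_average(reviews, today))  # 2.6 (a plain average would be 3.5)
```

With these two sample reviews, the two-year-old five-star review counts a quarter as much as the fresh two-star one, which pulls the average down to 2.6.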

Growth marketer Abhi Bavishi also recommends checking “whether the reviews are spaced-out between dates.” Why? Many companies might pay people or ask customers to review their software on popular review sites in bulk. This floods the “recent” filter with positive reviews, many of which might not stand the test of time.
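One rough way to check whether reviews are spaced out is to bucket them by calendar week and flag any week that holds an outsized share of the total. The sketch below assumes you’ve collected the review dates yourself; the 30% threshold and five-review minimum are arbitrary, hypothetical choices, not an industry standard.

```python
from collections import Counter
from datetime import date


def iso_week(d: date) -> tuple[int, int]:
    """ISO (year, week number) bucket for a date."""
    iso = d.isocalendar()
    return (iso[0], iso[1])


def review_bursts(review_dates: list[date], max_share: float = 0.3,
                  min_reviews: int = 5) -> list[tuple[int, int]]:
    """Return ISO weeks that hold more than `max_share` of all reviews."""
    if len(review_dates) < min_reviews:
        return []  # too few reviews to say anything about spacing
    per_week = Counter(iso_week(d) for d in review_dates)
    total = len(review_dates)
    return sorted(week for week, count in per_week.items() if count / total > max_share)


dates = [date(2023, 1, 15), date(2023, 6, 10), date(2023, 9, 20), date(2024, 1, 10)]
dates += [date(2024, 3, day) for day in range(4, 10)]  # six reviews in one week
print(review_bursts(dates))  # [(2024, 10)]
```

A flagged week doesn’t prove anything on its own (a product launch can cause a genuine spike), but it tells you which reviews to read more skeptically.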

⚡ Pro-tip: Don’t treat the two-year rule as the be-all and end-all for every software category. In some categories (like social media management), even a one-year-old review might contain outdated information. In others (like legal practice management software), things don’t move fast enough for a review to become old news in just two years.

4: The review is a list of product features without context

Found a review for a tool that sounds like it’s ripped out of its marketing page? No, thank you.

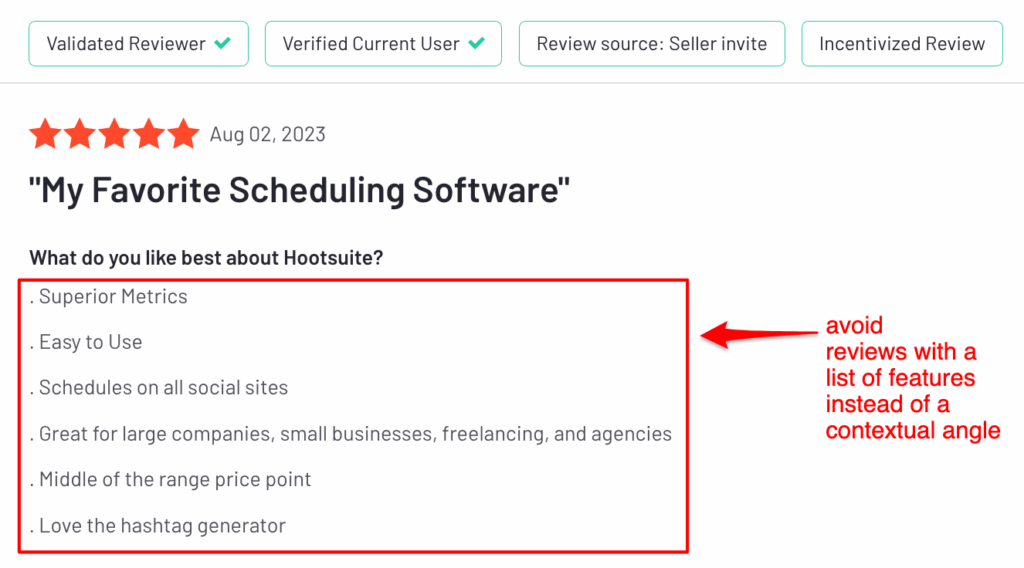

Take the following review for Hootsuite:

It’s a list of features you could also find on Hootsuite’s marketing page; there’s no context or insight here. Reviews like this might be paid, or posted simply because the company asked a customer for a testimonial.

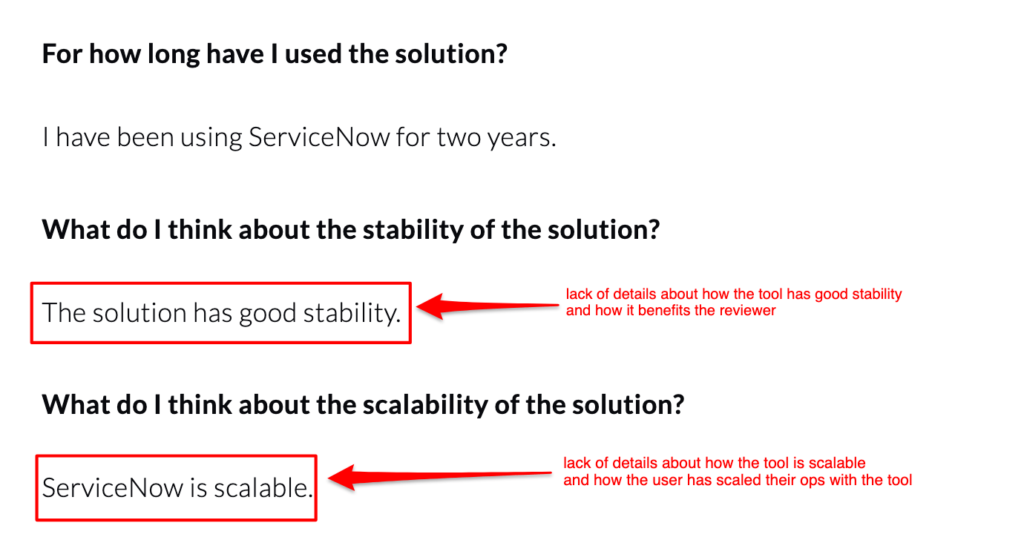

Another type of unhelpful review answers questions vaguely, without going into any detail or experience. For example, this review of the IT operations management solution ServiceNow simply answers each question with a yes or no, adding no context or details.

The text here won’t provide you with any useful information anyway. Don’t give a lot of weight to the star rating either.

5: The reviewer has only left a handful of extremely negative or positive reviews

Most review sites allow you to inspect the profile of a reviewer. Check how many reviews a person has written.

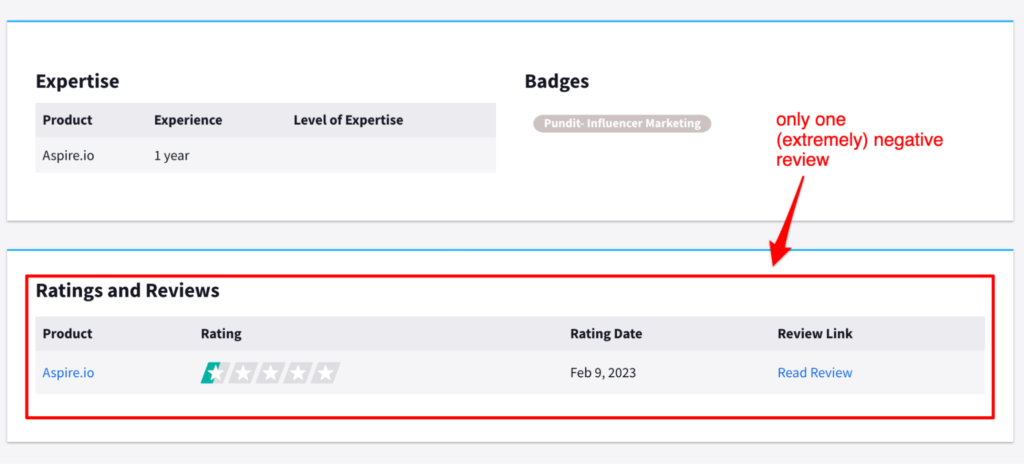

For example, I read an extremely negative review of the influencer marketing platform Aspire on TrustRadius. But when I clicked through to the reviewer’s profile, I noticed it was the only review they’d ever left.

Many times, a buyer might leave a review only when they have an extremely positive or negative experience. This doesn’t mean that their review doesn’t have any truth to it, but the reviewer’s experience might be an anomaly or a bit too emotionally charged — so take it with a grain of salt.

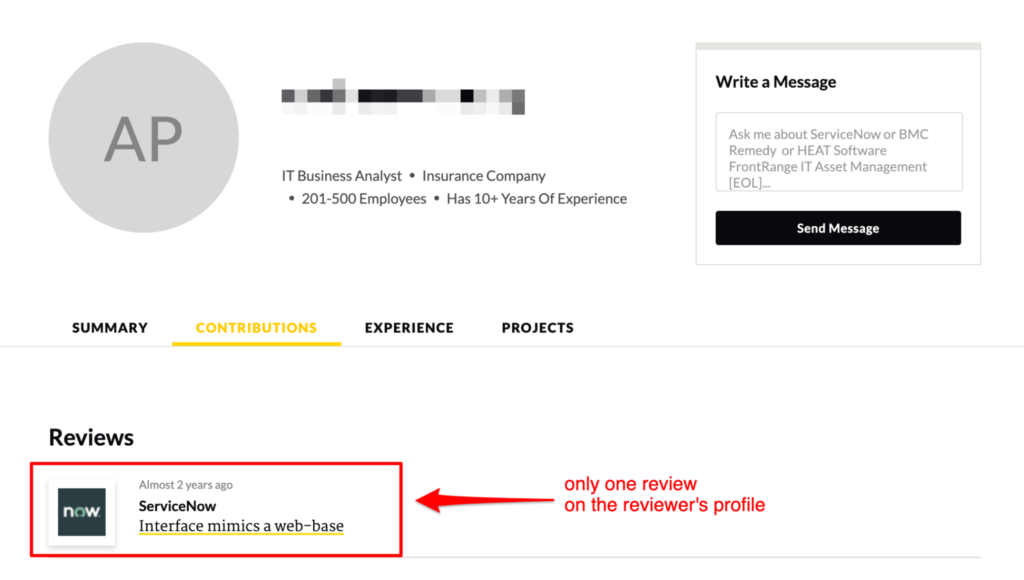

What if they’ve left only one review but it’s not an extreme one-star rating? For example, I found one PeerSpot reviewer who gave a tool five stars but never wrote any other review for any other software.

You can consider this review, but as before, don’t give it too much weight in your decision-making process. Why? These reviews, especially if they aren’t anonymous, are most likely written because the company requested them. The vendor might have supplied talking points and asked at just the right time (when the buyer felt positive about the software), which can skew the review’s authenticity.

Enough chit-chat about what kinds of testimonials to run away from. Let’s discuss ways to find reviews to run toward.

7 ways to find authentic reviews

How do you practice review mining as a buyer to avoid regretting your purchase? Here are seven methods to seek out trustworthy reviews:

1: Filter for median reviews

The easiest way to avoid polarized perspectives? Proactively hunt for three-star reviews.

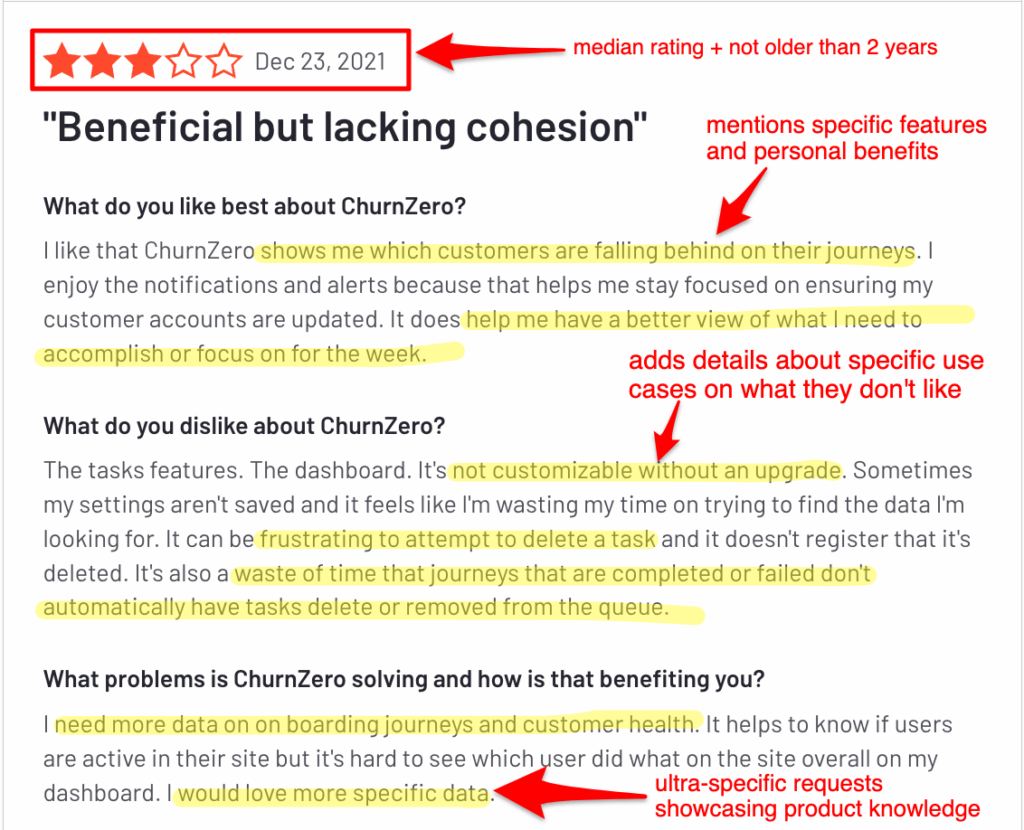

Median reviews are likely to mention the positives and the negatives of any tool. For instance, take the following review of the customer success platform, ChurnZero:

The reviewer specifically mentions the features they love and the functionalities they dislike. The testimonial is balanced and specific. You can count on this review to be true to its word.

Research suggests that humans proactively seek out extreme reviews because they’re easier for our minds to process. Fight this instinct and filter for three-star, four-star, or two-star reviews. They’ll likely be balanced and honest.

Many review sites, like G2, also round half-star ratings when you apply filters. For example, a four-star filter will return both 3.5-star and four-star reviews. Combing through two-star and four-star reviews (along with three-star reviews) will give you a more accurate picture.

Not to mention: Many vendors will only have a few hundred reviews, unless they’re a tech giant like HubSpot. This means the number of quality reviews you can examine will narrow significantly when you start applying filters for reviews that match your use case, aren’t older than two years, and have a three-star rating. In this case too, moving on to four-star and two-star reviews will come to your rescue.
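The filtering steps above (matching profile, recency, three-star reviews first, then widening to two and four stars) can be sketched as a small function. This assumes you’ve copied reviews into a simple structure yourself; the `Review` fields, the labels, and the `min_results` default are hypothetical, and review sites expose these filters through their own interfaces rather than code.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Review:
    stars: float
    posted: date
    company_size: str  # hypothetical labels, e.g. "small", "mid-market", "enterprise"
    use_case: str


def shortlist(reviews, company_size, use_case, today, min_stars, max_stars, max_age_years=2):
    """Reviews from similar companies and use cases, recent enough, within a star range."""
    cutoff = today - timedelta(days=365 * max_age_years)
    return [
        r for r in reviews
        if r.company_size == company_size
        and r.use_case == use_case
        and r.posted >= cutoff
        and min_stars <= r.stars <= max_stars
    ]


def balanced_reviews(reviews, company_size, use_case, today, min_results=3):
    """Start with three-star reviews; widen to two-to-four stars if too few remain."""
    three_star = shortlist(reviews, company_size, use_case, today, 3.0, 3.0)
    if len(three_star) >= min_results:
        return three_star
    return shortlist(reviews, company_size, use_case, today, 2.0, 4.0)


today = date(2024, 6, 1)
reviews = [
    Review(3.0, date(2024, 1, 5), "small", "scheduling"),
    Review(4.0, date(2023, 11, 2), "small", "scheduling"),
    Review(2.0, date(2024, 3, 9), "small", "scheduling"),
    Review(5.0, date(2024, 2, 1), "small", "scheduling"),      # excluded: five stars
    Review(3.0, date(2020, 5, 1), "small", "scheduling"),      # excluded: too old
    Review(3.0, date(2024, 4, 1), "enterprise", "analytics"),  # excluded: different profile
]
result = balanced_reviews(reviews, "small", "scheduling", today)
print([r.stars for r in result])  # [3.0, 4.0, 2.0]
```

Only one recent three-star review matches the profile here, so the sketch widens the range and returns the three-, four-, and two-star reviews instead.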

2: Look for reviews that are specific and detailed (beyond star ratings)

Relying on star ratings is helpful when you’re purchasing a dress for a wedding this weekend in a hurry. (Hello, it’s Wednesday already.)

But relying on star ratings alone when you’re buying expensive software that your whole team will use is a recipe for disaster.

Why? Because most vendors try to increase their review counts and ratings to rank highly on review sites’ category pages. Many buyers rely on these category rankings to decide which tools to evaluate, and vendors know that some review sites use review quantity and star ratings (to a certain extent) to shortlist vendors for these rankings. So, many vendors aim to generate a high volume of reviews with excellent star ratings, which can shrink the proportion of balanced reviews that buyers are looking for.

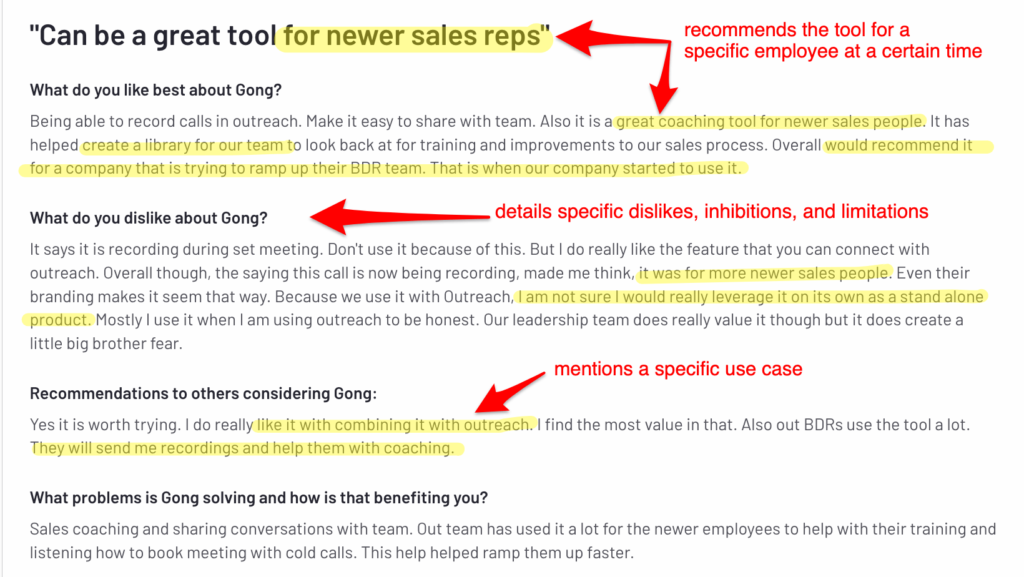

This is why you need to read the whole review and check if it’s specific, context-driven, and listing both pros & cons. For example, this review for sales software, Gong, mentions information about Gong’s various use cases for the company, what the tool wouldn’t be great for, and which features the software could improve.

Tons of reviews are terse one-sentence responses. Their insights will be nowhere near as helpful as those in comprehensive, specific reviews. Besides, some of these one-liners are written out of formality to get the CS team off the reviewer’s back, or by happy users cherry-picked to raise the vendor’s ratings.

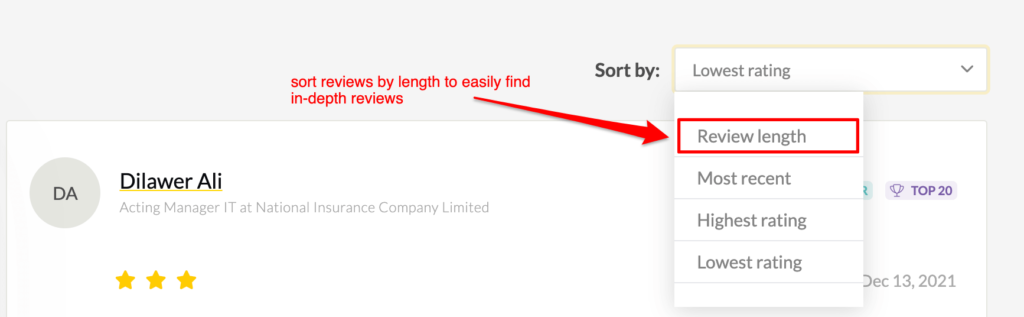

Some sites, like PeerSpot, make it easier by having a filter to sort reviews by length.

If you want to take it even further, you can use the sentiment analysis approach followed by Debbie Chew, Global SEO Manager at Dialpad. It’s especially reliable for purchasing expensive software with yearly contracts.

“I use sentiment analysis to categorize reviews as positive, negative, or neutral based on the overall sentiment expressed. I also apply natural language processing (NLP) to extract specific keywords and phrases that indicate the reasons behind the sentiment. For instance, I look for mentions of product features, customer service experiences, or delivery issues. Then, I assign a weight to each of these keywords based on their importance in the context of the product or service. This allows me to identify not only whether a review is positive or negative but also the key factors contributing to that sentiment.”
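To make the idea concrete, here is a toy version of keyword-based sentiment analysis: score each sentence with small positive and negative word lists, then attribute that score to any feature keyword in the same sentence, multiplied by how much that feature matters to you. This is a deliberately simplified stand-in, not Debbie Chew’s actual workflow; the word lists and `FEATURE_WEIGHTS` values are hypothetical, and a real setup would use a proper NLP or sentiment model.

```python
import re

# Tiny hand-written lexicons; a real setup would use a trained sentiment model.
POSITIVE = {"love", "great", "easy", "helpful", "fast", "reliable"}
NEGATIVE = {"slow", "buggy", "confusing", "expensive", "missing", "clunky"}

# Hypothetical importance of each feature to *your* team (higher = matters more).
FEATURE_WEIGHTS = {"reporting": 3.0, "support": 2.0, "integrations": 2.0, "pricing": 1.0}


def analyze_review(text: str) -> tuple[str, dict[str, float]]:
    """Label a review's overall sentiment and attribute it to the features mentioned."""
    overall = 0
    drivers: dict[str, float] = {}
    for sentence in re.split(r"[.!?]+", text.lower()):
        words = re.findall(r"[a-z]+", sentence)
        polarity = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
        overall += polarity
        for w in words:
            if w in FEATURE_WEIGHTS:
                # Credit (or blame) the sentence's sentiment to each feature it mentions.
                drivers[w] = drivers.get(w, 0.0) + polarity * FEATURE_WEIGHTS[w]
    label = "positive" if overall > 0 else "negative" if overall < 0 else "neutral"
    return label, drivers


print(analyze_review("I love the reporting. Support is slow and confusing."))
# ('negative', {'reporting': 3.0, 'support': -4.0})
```

The review reads as slightly negative overall, and the drivers show why: praise for reporting is outweighed by complaints about support.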

The bottom line: Pay extra attention to reviews with specific details about the tool, the reviewer’s company, and how the company uses the software. Find the good, the bad, and the ugly. The most helpful reviews also go beyond the tool and discuss the support team’s competence, ROI for the price, and product adoption.

3: Search for features that are important to you

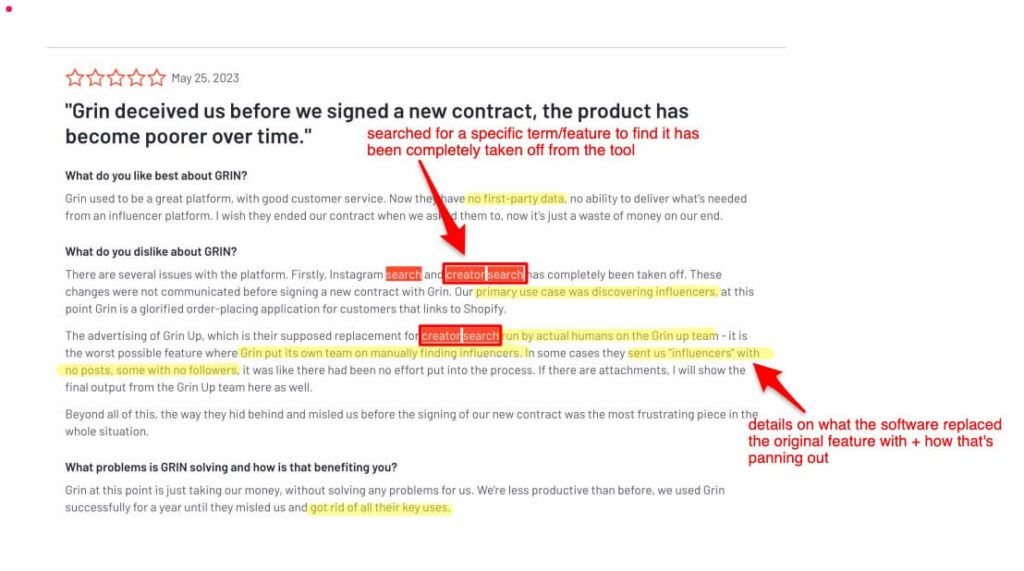

When you’re review mining as a buyer, a few specific features will matter most to you. Let’s say you’re debating whether to purchase an influencer discovery tool like GRIN to find and evaluate creators quickly.

Now, influencer marketing tools come with a host of other features, but you know “finding new influencers” is the core problem you’re looking to solve. So, search for keywords related to this problem in the reviews to see what buyers say about this feature in GRIN.

I searched for “creator search” to find that the tool has changed — and the native ability to search for creators is now only present for a few platforms.

As a marketer, you can drop GRIN from your evaluation list because it doesn’t have the one core feature you’re looking for.

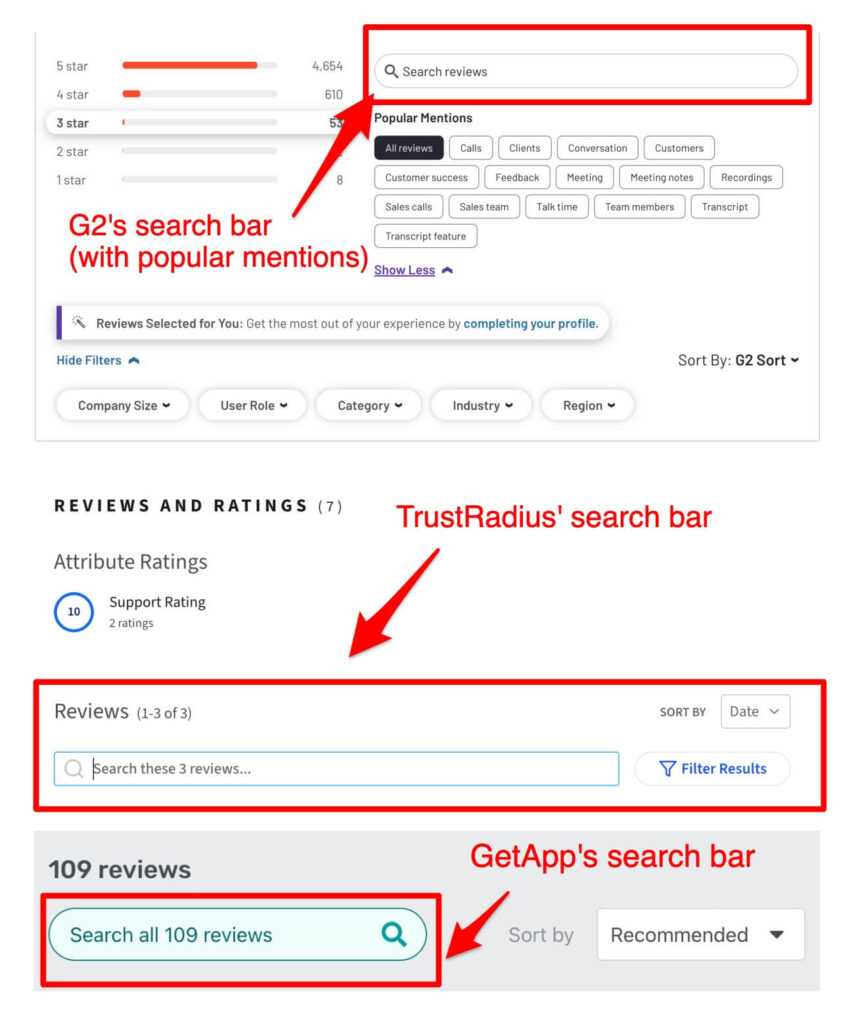

Almost all review sites have a search bar to make it easy for you to search for any and all keywords you like. Some sites, like G2, also suggest popular keywords.

⚡ Pro-tip: Try several variations of the primary keywords for each feature. In the example above, I searched for “influencer discovery” and found it flooded with positive reviews. When I changed it to “creator search,” I found the up-to-date info.

4: Spot patterns across reviews

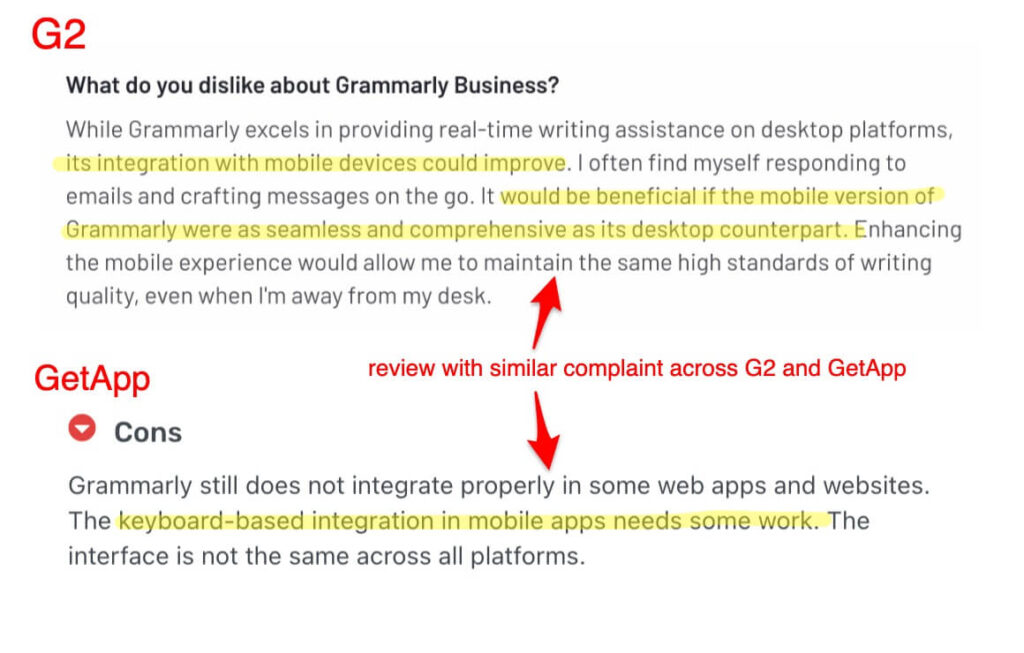

Looking for commonalities among various reviews is the easiest way to be well-informed about the strengths and weaknesses of any software.

Why are patterns trustworthy? Because they’re spread across review sites, timelines, and users, which makes them very hard for any company to fake or game.
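If you collect review snippets from several sources, counting how many distinct sources mention each theme is a simple way to surface patterns. A minimal sketch, assuming you supply the theme keywords yourself and that a plain substring match is good enough; the sample snippets are invented for illustration.

```python
from collections import defaultdict


def recurring_themes(reviews_by_source: dict[str, list[str]], themes: list[str],
                     min_sources: int = 2) -> dict[str, list[str]]:
    """Return each theme mentioned in at least `min_sources` different sources."""
    seen_in: dict[str, set[str]] = defaultdict(set)
    for source, texts in reviews_by_source.items():
        for text in texts:
            lowered = text.lower()
            for theme in themes:
                if theme.lower() in lowered:  # simple substring match
                    seen_in[theme].add(source)
    return {theme: sorted(sources) for theme, sources in seen_in.items()
            if len(sources) >= min_sources}


reviews = {
    "G2": ["Onboarding took forever", "Great reporting"],
    "TrustRadius": ["The onboarding was painful"],
    "Reddit": ["Reporting is solid, onboarding is slow"],
}
print(recurring_themes(reviews, ["onboarding", "reporting", "pricing"]))
# {'onboarding': ['G2', 'Reddit', 'TrustRadius'], 'reporting': ['G2', 'Reddit']}
```

Counting sources rather than raw mentions is the point: one site flooded with the same complaint is weaker evidence than the same complaint showing up independently on three.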

5: Be aware of each review site’s scoring methodology

Have you ever wondered how review sites decide the order in which they list vendors in a category? Each review site uses a different approach:

- On TrustRadius, it’s review count — i.e., which vendors have the most reviews (though it has a shortlist of “top rated” products in a mini section that precedes the main rankings.)

- On G2 and PeerSpot, it’s their respective algorithmic scores, i.e., which vendors score highest.

- On Gartner Digital Markets (Capterra, GetApp, and SoftwareAdvice), it’s sponsored — i.e., who’s bidding the most per click to rank highest.

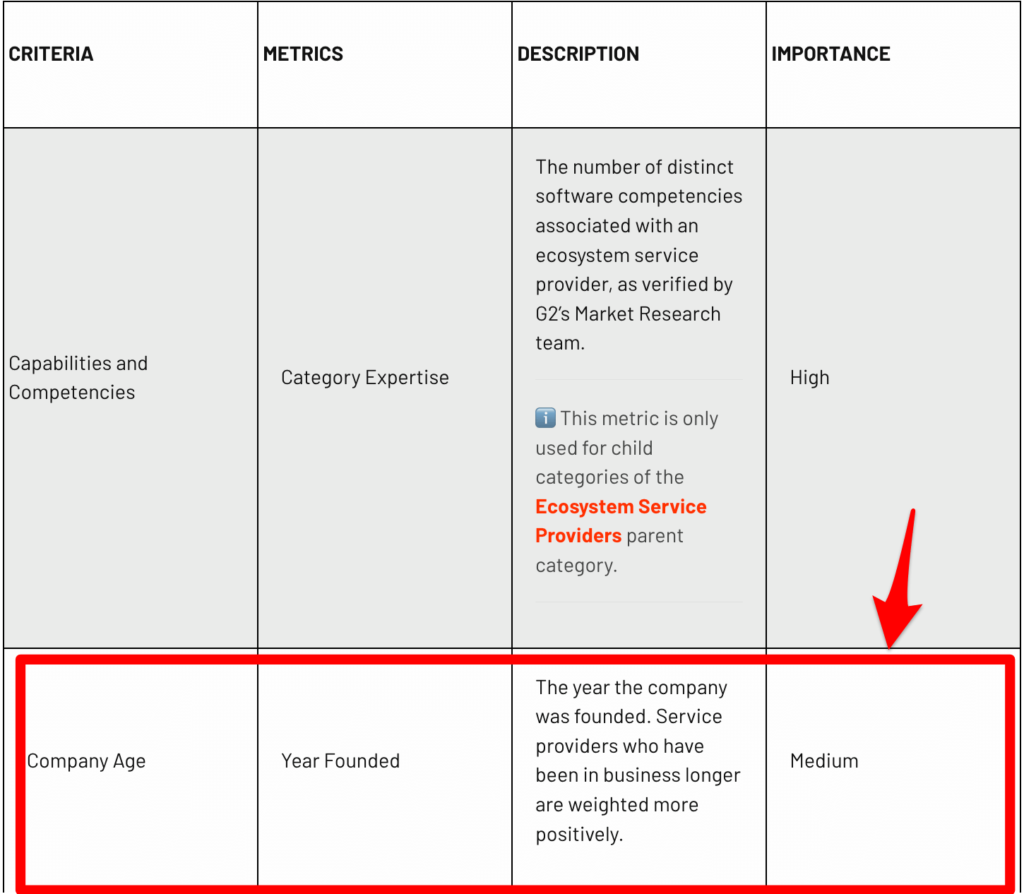

It helps to know how these review sites calculate ratings and hand out accolades. For example, G2’s scoring method gives “year the company was founded” medium importance in its Service Scoring. If that metric doesn’t factor into your decision, G2’s Service Scoring shouldn’t carry much weight for you.

Major software review sites, like G2, Capterra, and TrustRadius, publish their scoring methodologies on their websites. Read these documents to understand how each site calculates scores and ratings. If a methodology aligns with the factors you care about, great. If not, don’t let that particular number or rating factor into your purchasing decision.
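If a site’s headline score weighs factors you don’t care about, you can recombine its sub-ratings (many sites show ones like ease of use or quality of support) using your own priorities. The sketch below is a plain weighted average; the factor names and weights are hypothetical, and factors the site doesn’t rate are simply skipped.

```python
def personal_score(subratings: dict[str, float], my_weights: dict[str, float]) -> float:
    """Weighted average of a tool's sub-ratings, using only factors you care about."""
    relevant = {k: w for k, w in my_weights.items() if k in subratings and w > 0}
    if not relevant:
        raise ValueError("none of your factors are rated for this tool")
    total_weight = sum(relevant.values())
    return sum(subratings[k] * w for k, w in relevant.items()) / total_weight


tool = {"ease_of_use": 4.0, "support": 5.0, "value": 3.0}
mine = {"ease_of_use": 2.0, "value": 1.0, "company_age": 1.0}  # support ignored on purpose
print(round(personal_score(tool, mine), 2))  # 3.67
```

Here the tool’s strong support rating drops out because it isn’t one of your priorities, and “company_age” is skipped because the site doesn’t rate it.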

⚡ Pro-tip: Run a quality assessment for each review you evaluate. Try to understand which factors a reviewer is assessing to provide their ratings.

- Are they giving a low rating because a product is difficult to use, even though it’s helpful once you’ve been trained on it?

- Are they giving a high rating because a software’s customer support is wonderful, but the tool itself isn’t that great?

Different reviewers weigh different factors, depending on what matters to them. To evaluate software accurately, be aware of both the site’s rating methodology and the rationale each reviewer used.

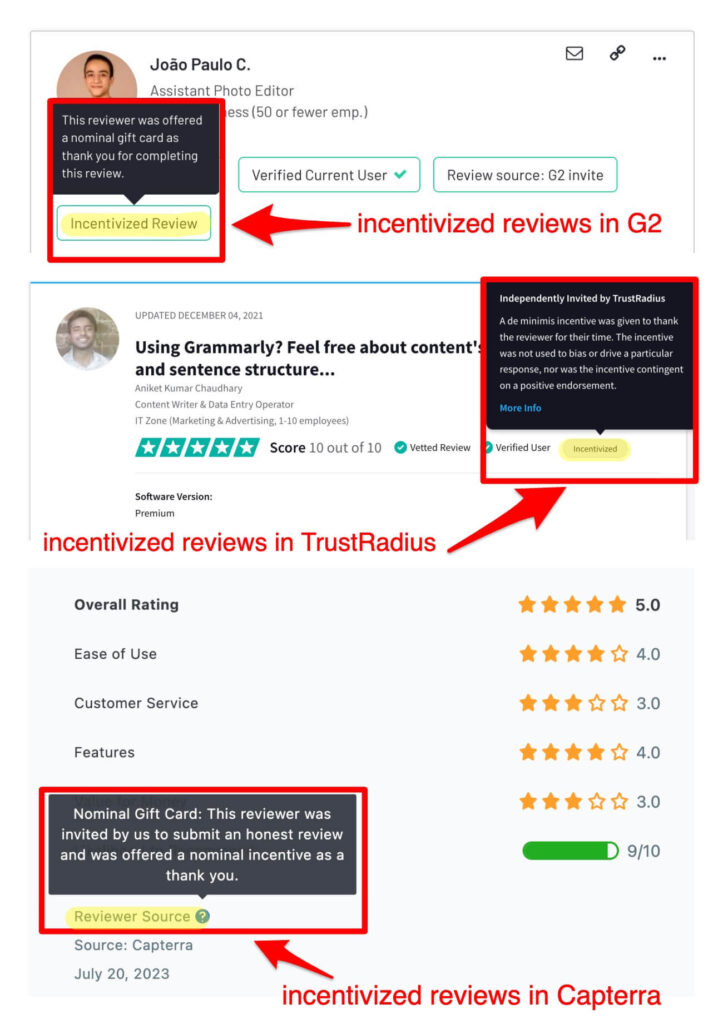

6: Check if the vendor has incentivized a review

Both vendors and review sites sometimes offer customers a monetary incentive in exchange for a review. On almost every review site, you can spot these reviews by their “incentivized” label.

It’s simple enough to set aside reviews incentivized by vendors themselves. When a vendor pays or asks customers for reviews, those reviews will likely lean positive. Even when a vendor asks a customer for an organic review, it will probably introduce selection bias by cherry-picking customers who already like the product and rate it well in NPS surveys.

While you have to watch out for reviews incentivized by the vendor, you don’t have to worry about reviews incentivized by review sites. Why? Because review sites actually work toward balancing opinions in their incentivized reviews. For example, TrustRadius sources members from their community to provide honest, unfiltered opinions.

⚠️ Remember: Who the incentive is coming from is crucial. Software review sites have robust mechanisms in place to validate a buyer (like asking for screenshots from inside the product) and work toward transparency and unbiased reviews.

7: Scour through reviews from multiple sources

Don’t rely on a single review site for all your information about a software tool. Different websites have different scoring methodologies, users, and details; gobble them all up to develop a more balanced perspective on a tool.

Ryan Prior, Head of Marketing at Modash, says he rarely relies on review sites alone to dictate his purchase decisions:

“Rather than specific review sites, I rely more on my overall brand perception (the sum of all the things I’ve seen about them on LinkedIn etc.), dark social, and their website.”

Like Ryan, you can also search for reviews and opinions on a software beyond review sites. Some places you can look into are:

- Blogs

- Social media

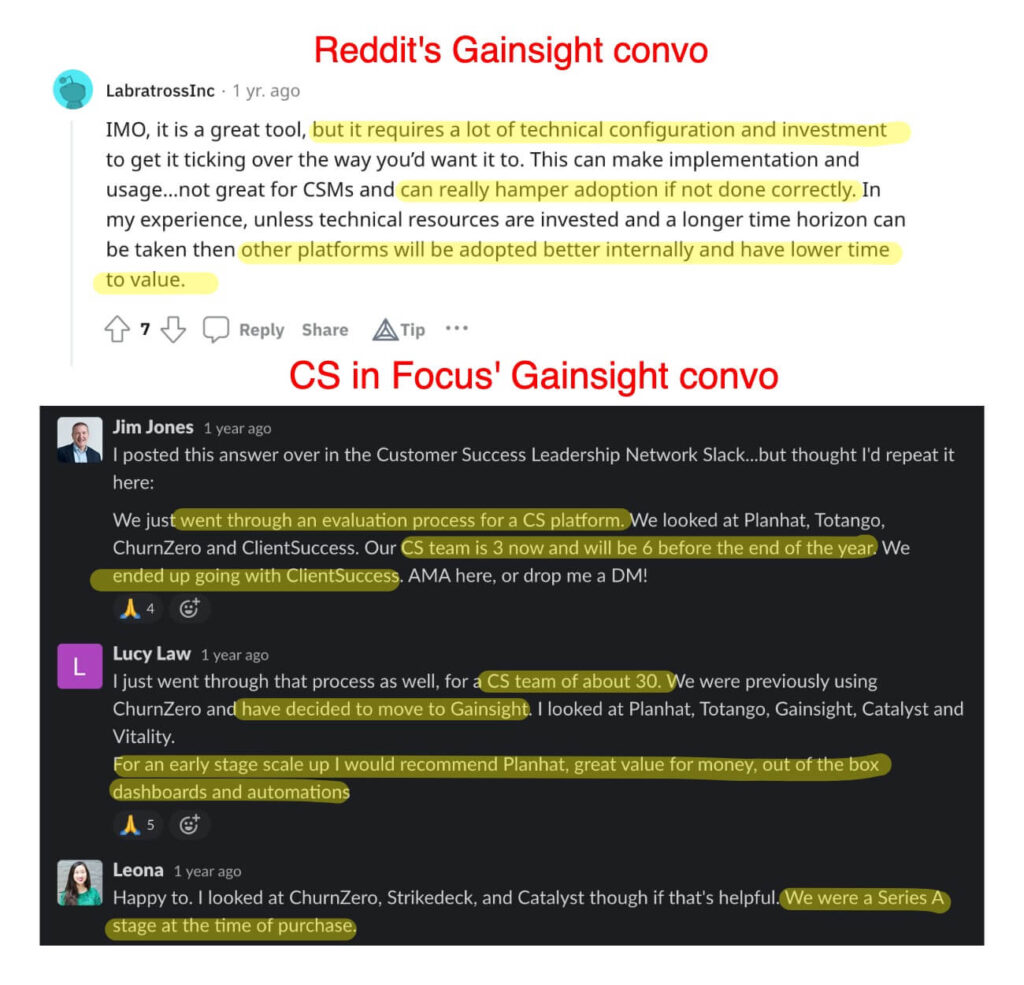

- Niche communities

The more sources you look into, the closer you get to the real voice of a software’s customers. For example, for the customer success platform Gainsight, you’ll find better reviews and conversations on Reddit, in Slack groups like CS in Focus, and on blogs like customerfacing.io.

Beyond review sites, you can also ask to speak 1:1 to someone who has used the tool already and ask them your questions. It elevates passively reading reviews to actively asking follow-up questions.

No review is 100% objective

While all the above tips can help to weed out biased reviews and use the helpful ones, keep in mind that no review is completely objective.

That’s why it helps to rummage through reviews from many places to minimize biases as much as possible.

Wondering which websites to check for customer reviews? Here are the ten best software review sites.